CSSS 2019 — Cultural Evolution

Our 2019 lectures went from the biological priors of the visual system to meta-cultural knowledge production. A few sources that will enable you to follow up, or go more deeply, into the ideas in play:

1. Three books on cultural evolution: Cognitive Gadgets, by Celia Heyes / Existence, by David Brin / A Culture of Growth, by Joel Mokyr

2. Short introductions to the basic concepts: Information theory for intelligent people / Bayesian reasoning for intelligent people

3. Empirical research into the social and cognitive roles of novelty and transience: Exploration and exploitation of Victorian science in Darwin’s reading notebooks / Individuals, institutions, and innovation in the debates of the French Revolution / Sameness attracts, novelty disturbs, and outliers flourish in fan fiction online.

CSSS 2018 — Social Minds

Lectures happened in 2018 and they were awesome.

CSSS 2017 — Social Creativity

Bayesian Angels and Human Reason (Bicci di Lorenzo, 1433) / John Locke’s “Rules of a Society”

Interpretive Accretion (Heaberlin & DeDeo, 2015)

Our lectures began with the Bayesian Angel: the idea of modeling both human thought, and (by extension) human communication, by reference to the performance of an ideal agent with unlimited memory and computational power. For a gentle introduction to both the philosophy of this practice and the underlying mathematics, see Bayesian Reasoning for Intelligent People, an article that has been growing over the last few years beyond E.T. Jaynes to include discussion of the limits of these methods, with detours through John Searle, John Maynard Keynes, and Charles Sanders Pierce.

Information Theory for Intelligent People is a parallel article covering Entropy, Kullback-Leibler Divergence, and some of the connections between information (as a cognitive and social phenomenon, quantifying the flow of uncertainty) and disorder (as a material property). One of the first places we used information theory was in a study of the Civilizing Process through court transcripts from the British Criminal Justice system. If you’re interested in measuring these quantities from real world data (as we did, for example, to compare Jane Austin and Virginia Woolf), take a look at our open-source Python code THOTH that uses some clever tricks to correct for bias, and an early paper applying these methods to (among other things) Chelsea Manning’s WikiLeaks data. As part of a popular science program, we used Jensen-Shannon Distance to quantify the 2016 presidential debates, covered by Nathan Collins in the Pacific Standard, and by Dave Roos in HowStuffWorks (with nerdy graphs).

To quantify the extent of pattern making and pattern breaking in the various systems we had under study, we used of Kullback-Leibler divergence. We think this works for a lot of reasons—not just because we know it works at lower levels of the visual system (based on Itti & Baldi’s pioneering work), but because we were able to use it to study related higher-order phenomena: in particular, the reading patterns of (to put it mildly) an exemplary information seeker: Exploration and exploitation of Victorian science in Darwin’s reading notebooks [in the journal Cognition; arXiv link]. Our work on the social reception of novelty is in press—stay tuned!

Our second lecture, Social Minds and System Design, was accompanied by a handout—“Rules for Social Minds”, from John Locke (on rules for his non-drinking drinking club) and Immanuel Kant (on “tasteful dinner parties”) to the Santa Fe Quakers, Wikipedia, and Less Wrong. I’d be delighted to see more examples; if you don’t know MeatballWiki, it’s like TV Tropes, but for political philosophy—begin anywhere, say here, and keep clicking.

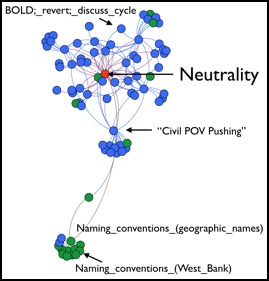

To build the Markov Models of Wikipedian conflict we used SFIHMM, a set of optimized (and parallelizable) C code to build Hidden Markov Models from timeseries, use AIC to determine state size, and extract substructure from spectral clustering. The paper describing the emergence of these highly-structured conflict subspaces, and attempting to connect these to exogenous factors is Conflict and computation on Wikipedia: A finite-state machine analysis of editor interactions. Meanwhile, Bradi’s work on Wikipedian “Norm Bundles”, including both descriptive statistics of this evolving network of norms, and work connecting it to the Iron Law of Oligarchy, is The Evolution of Wikipedia’s Norm Network, which appears in a popular version on the Christian Science Monitor’s Complexity Series as the Source Code of Political Power.

Major Transitions in Political Order, a synthesis of much of the work presented in our two lectures but from the perspective of social feedback, now appears as a chapter in From Matter to Life: Information and Causality (Cambridge University Press; edited by Sara Imari Walker, Paul C.W. Davies, George Ellis).

Some popular writing on social creativity: Chasing Pattern, based on a 2016 popular lecture at the London Barbican, on patterns and human social behavior; thy commitment, decorated with Joy, begins to speak briskly, on the possibility of poetry-writing AIs; on our failures to describe, and model, the unpredicted in human behavior, in 43 Visions.

CSSS 2016 — Social Minds

Lectures happened in 2016 and they were awesome.

CSSS 2015 — Social Minds

Babylonian Talmud (Vilna Edition) / Network of Euclidean Propositions (Nicole Miller, St. John’s College, c.2003)

Wikipedian Moral Network (Heaberlin & DeDeo, 2015)

Lecture one began with Group Minds and the Case of Wikipedia, which provides (1) an introduction to the idea of coarse-graining a finite-state machine, and (2) an explicit game theoretic model of conflict and collaboration. Collective Phenomena and Non-Finite State Computation in a Human Social System is the first paper in the series; it established the reverse-Broken Windows phenomenology for Wikipedia, as well as the non-finite nature of the underlying process. Optimal high-level descriptions of dynamical systems provides an extensive account of how we (as scientists) might think about coarse-graining; look there for references to other work, including that of Jim Crutchfield. In the past, I’ve devoted an entire lecture to the mathematics of coarse-graining; scroll down to earlier years for more links.

We began lecture two with an account of coarse-graining in the wild. The Evolution of Lossy Compression, with Sarah Marzen, describes how evolution drives perception to a boundary between a high- and low-fidelity regime; this paper is in submission, and we welcome comments and ideas on how it might be applied. A basic refresher on the concepts needed to understand that paper can be found in Information Theory for Intelligent People; rate-distortion theory is covered in Cover & Thomas (and see also our references; a paper on the related Information Bottleneck method is particularly good).

Social feedback and the emergence of rank in animal society, on the emergence of avian politics with Elizabeth Hobson, is available as a preprint, and contains many more details for the curious on our methods and tools. The work on IU undergraduates remains for amusement only (and was very well picked-over in lecture; thank you for entertaining it).

Students interested in other empirical work at the intersection of culture, norms, and cooperation might look at The civilizing process in London’s Old Bailey, on tracking the evolution of norms against violence; Dynamical structure of a traditional Amazonian social network, for studies on reciprocity and kin in an indigenous people, and a pair of papers on animal conflict, Evidence of strategic periodicities in collective conflict dynamics, on timescales of conflict, and Inductive Game Theory and the Dynamics of Animal Conflict, on agent-based theories of the underlying decision-rules in play. A lovely paper, Sparse code of an animal society, by collaborators of mine, draws on (a different form of) lossy compression to understand the same data.

Everyone loves the Axial Age. I first encountered it in a series of essays, The Axial Age and Its Consequences, edited by Robert Bellah and Hans Joas, and in celebration of Bellah’s magnum opus, Religion in Human Evolution. Charles Taylor contributes an essay to the edited volume; his book, A Secular Age, provides an account for those interested in projecting out towards the future. The Axial Age as a concept plays an increasingly larger role in big picture accounts of cultural evolution; notably in David Graeber’s wild (in all senses of the word) book Debt: a 5000 Year History.

Institutional Crowding-Out is a term I first learned from Sam Bowles in his book on Microeconomics; his book A Cooperative Species: Human Reciprocity and Its Evolution, together with Herb Gintis, provides a account of the emergence of some (but I would argue, and they would agree, not all) norms of fairness and common good. Herb’s book The Bounds of Reason provides an ambitious attempt to draw on the genius of economics to go beyond simple financial exchange and into the domain of culture and human social life.

Major Transitions in Political Order, a synthesis of much of the work presented in our two lectures, is forthcoming as a chapter in From Matter to Life: Information and Causality (Cambridge University Press; edited by Sara Imari Walker, Paul C.W. Davies, George Ellis).

Our work on Wikipedia epoch structure is still in preparation—so many null signals are hard to organize!—but will be available as a pre-print soon. SFIHMM, code to do high-performance fitting of finite-state machines to time-series data, is available on request. Our work on moral networks is also in preparation. In both cases (and, indeed, for everything and anything discussed here) I and my collaborators are thrilled to hear comments, suggestions and feedback. If you scroll down to earlier years, you can trace the origins of many papers above; I am exceedingly grateful for the feedback and engagement I get each year from the school, and encourage you to be in touch.

CSSS 2014 — Other Minds / Group Minds

Our first lecture, other minds, first covered explicit examples of how to use basic tools in information theory (entropy, Jensen-Shannon divergence, KL divergence) to characterize features of a system of direct cognitive significance.

Glancing through Information Theory for Intelligent People and Bayesian Reasoning for Intelligent People will refresh your memory of some of basics of information theory and its foundations in Bayesian reasoning and inference—or just provide a new perspective on things you already know well. E.T. Jaynes’ Probability Theory is the canonical deep reference for the Bayesian side; Cover and Thomas for Information Theory.

If you wish to try some of these methods on your own systems, you will need to estimate information-theoretic quantities from data—e.g., you will need to estimate the “true” entropy of an underlying distribution from a limited number of samples. This is a hard problem that we did not explicitly address. See our 2013 paper in Entropy, and public python code THOTH for an introduction, and a nice and conceptually simple solution. Finally, if you enjoyed the toy examples from J. S. Bach, John Milton, and the US Congress, you can also take a look at a “more serious” application of these ideas in our 2014 Civilizing Process paper, which used information theory to study the evolution of traditions of prosecution in the British criminal justice system (see also a high-level popular account in Nautilus).

We then introduced the Wikipedia problem of how to explain, in a game theoretic fashion, the cooperative phenomena to hand. My study of this system began back in 2012, when I needed an emergency toy example for my CSSS lectures and nothing else worked (see below). A 2013 paper studied the system from a different angle—the computational—and also includes details on the system itself.

For an excellent introduction to game theory that doesn’t get bogged down following out historical dead-ends, two ideal books for the mathematically inclined are Multiagent Systems: Algorithmic, Game-Theoretic, and Logical Foundations, and the Bounds of Reason: Game Theory and the Unification of the Behavioral Sciences.

Our second lecture, group minds, began with a brief introduction to the question of emergence and reductionism; see the notes for lecture one, CSSS 2012 below for references and further reading.

We covered the use of Maximum Entropy methods for building scientific explanations of complex phenomena; the original paper that introduced this account is Jaynes’ 1957 paper Information Theory and Statistical Mechanics. One of the clearest accounts of the use of Maximum Entropy in non-physical systems is John Harte’s Maximum Entropy and Ecology.

CSSS 2013 — Emergence / Reductionism

We focused on (1) models of cognition (Bayesian and Information-Theoretic) and (2) coarse-graining and the nature of explanations for complex and collective phenomena, including the notion of an “effective theory”. One might consider (1) as the raw material of an effective theory of social and biosocial phenomena; it provides as well the clearest introduction to the notion of coarse-graining.

A third lecture, with the SJC Graduate Institute, considered the implications of this scientific material for the philosophy of science and for the question of reductionism, hierarchies of fields, and whether “we’re all just fermions and bosons”.

Thank you all very much for your engagement with the material, and your many questions and comments during all three talks.

A collaborative paper, on the uses of the coarse-graining axioms (and novel techniques for preserving them in estimation) is out recently in the journal Entropy. See also THOTH, an open source set of code for estimation of quantities.

We talked about E.T. Jaynes’ Probability Theory (a very clear introduction to why one might consider probability a “model” for thought). On the information theory side, Cover and Thomas is such a standard that what it covers is often considered the definition of (the domain of) information theory.

Bayesian Surprise is introduced (with references to the papers discussed in the talk) at this USC site.

Gigerenzer’s edited volume introduces the concept (due originally to Herb Simon of Carnegie Mellon) of Bounded Rationality. For a critical response, which emphasizes the limits of non-Bayesian accounts in cognitive science, you might consider Chater & Oaksford’s collection.

You can read more about Ration Bowls at this GMU site (I draw the account of the bowl-copying process from Henry Wright).

The joint SFI and St. John’s College lecture at the Graduate Institute on reductionism made heavy use not only of finite-state machines and the Chomsky hierarchy (see previous years), but also Godel’s Incompleteness Theorem. An ideal introduction to the latter is by Nagel and Newmann (reissued with a new introduction by Douglas Hofstader).

Despite the (regrettable, but occasional) physics-envy, Every Thing Must Go is a fantastic piece of philosophy, and well-worth reading. James Ladyman (a co-author) and Karoline Wiesner (SFI external faculty) are collaborating on a new book, “What is a Complex System?” for the Princeton Complex System series.

The discussion referenced, among other things, Donald Davidson’s Anomalous Monism argument from the paper Mental Events.

We did not get to game theory and common knowledge. Seen in the correct light, game theory allows one to integrate probabilistic and information-theoretic accounts with more informal accounts of “what you know”. A popular article of mine, the Coin Toss and the Love Triangle, may be of interest; see also Chwe’s Rational Ritual, and collaborative work with David Krakauer and Jessica Flack on animal behavior.

A preprint of the paper on the Wikipedia work is available. Many other references for code, books and papers can be found in sections below from previous years.

CSSS 2012 — Emergence

Update: A preprint of Evidence for non-finite-state computation in a human social system, extensively discussed and argued over in the first lecture, is now online.

Update: all three Emergence lectures are now online, on both youtube and iTunes U. Lecture One ; Lecture Two ; Lecture Three.

Update: more philosophical accounts of emergence may be interested in this video from Sean Carroll’s Moving Naturalism Forward workshop (more info).

We gave two accounts of emergence: one dealing largely with the properties of a system under coarse graining, the other dealing with the phenomenon of symmetry breaking.

Effective Theories for Circuits and Automata (free copy) is the guide for the first one, and makes a case for the use of coarse-graining and renormalization (Lecture One) in computational/functional systems that are not governed by a spatial organization (Lecture Two).

The second is much more widely discussed, and has made its way into the literature beyond the physical and mathematical sciences.

The Central Limit Theorem as an example of Universality (skip Sec. 3.4 on Lattice Green Functions, unless you live in a crystal). Aggregation (i.e., considering a system with more and more agents) One Particle and Many (skip Sec. 2.3 unless you are near zero Kelvin). Both from Leo Kadanoff's readable (if you have some background in physics, chemistry or biochemistry) book Statistical Physics: Statics, Dynamics and Renormalization.

The third “universality class” — i.e., limiting distribution — that we discussed in our cartoon introduction (in addition to the log-normal, for languages, and the Fisher log-series, for ecosystems) is introduced in a Nature paper by Bohorquez, Gourley, Dixon, Spagat and Johnson in 2009.

The failure of Black-Scholes is discussed from the Mandelbrot point of view in many places, including The Misbehavior of Markets. The somewhat less media/physicist friendly account by Warren Buffet on how the non-stationary variance of the market functions is also worth reading, from his 2008 letter to shareholders (page 19).

Robert Batterman's book, Asymptotic Reasoning in Explanation, Reduction, and Emergence has a readable and inspiring account of "explanation" and the role of effective theories. (Note that your lecturer does not follow his later account of emergence, which we discussed in a very different fashion).

Our account of coarse-graining and renormalization group flow draws (hopefully clearly) from the very technical literature. One nice place to look if you have a physics mind-set is Michael Fisher's article, Ch. IV.8, in Conceptual Foundations of Quantum Field Theory (which includes a number of amazing articles, if you are game, on renormalization, effective theories, and emergence).

Lecture 2 (Monday afternoon)

Techniques for finding the Bayesian best-match probabilistic finite state machine (a.k.a., Hidden Markov Model) for a particular string of observed behavior are described in Numerical Recipes, 3rd. Ed. (Press et al). Chapter 16.3. Tapas Kanungo has a nice implementation of the E-M algorithm that is (somewhat) industry standard for the simple case.

We played Contrapunctus XIV in an arrangement for strings by the Emerson String Quartet. Then we played it again in MIDI form in Mathematica, then we truncated to the top two voices, and shifted both into a single octave to arrive at the process with only 104 output symbols (some of the 12x12 possible chords Bach did not use). Then we tried to fit this process by a 12-state Hidden Markov Model. It did not sound very good, and this allowed us to discuss the limitations of finite state machines for processes with multiple timescales, hierarchies of interacting processes, and systems of greater computation complexity (e.g., the parenthesis-matching game).

You may want to know how far Machine Learning can be pushed to produce "Bach-like" music, and whether (approximations to) higher-complexity processes might improve it. This is discussed in charming detail in Baroque Forecasting, by Matthew Durst and Andres S. Weigend, in Time Series Prediction, a volume from early meeting at SFI.

Group Theory, and the extension of the Jordan-Holder decomposition of groups to semigroups (i.e., the more general class of finite state machines with irreversible operations, such as the ABBA machine), forms a central theme of our discussion. Some very charming introductions to group theory exist (if one is not able to attend Douglas Hofstadter's classes at I.U.!) -- one perhaps suitable for visual thinkers is Visual Group Theory.

The Krohn-Rhodes theorem, which proves the consistency of a hierarchy of coarse-grainings for finite state machines, gets complicated. References to excellent papers by Christopher Nehaniv, Attila Egri-Nagy, and others can be found in the Effective Theories paper referenced above. The "Wild Book", photocopied and passed around in the 1970s, that made the case for the importance of the theorem, is now re-issued in a revised and edited version as Applications of Automata Theory and Algebra: Via the Mathematical Theory of Complexity to Biology, Physics, Psychology, Philosophy, and Games (just in case you thought there was something it might not apply to).

Lecture 3 (Tuesday morning)

Our account of symmetry breaking as a canonical form of emergence is inspired by the foundational article More is Different (free copy), by SFI co-founder Phil Anderson.

The discussion of symmetry breaking in turbulence as one alters the control parameter is described elegantly in the beginning of Uriel Frisch's Turbulence.

Order Parameters, Broken Symmetry, and Topological Defects, by James P. Sethna is a readable and clear account of how this plays out in physics (that gets very advanced by the end!)

Our major example of a phase transition in a social/decision-making system was that found for the Minority Game when agents build strategies out of a finite-history list, from a paper by Damien Challet and Matteo Marsili (free copy). An excellent summary of what we know about the humble El Farol bar is at Minority Games: Interacting Agents in Financial Markets.

CSSS 2012 — Statistics and Stochastic Processes

Overall book: David J.C. MacKay, Information Theory, Inference, and Learning Algorithms.

Lecture 1 (Thursday)

E.T. Jaynes' Information Theory and Statistical Mechanics (free copy).

Elliott W. Montroll On the entropy function in sociotechnical systems. Focus on the Sears-Roebuck Catalog; we will read this critically in class. Optional: Thermodynamic Treatment of Nonphysical Systems: Formalism and an Example (Single-Lane Traffic) (with Reiss & Hammerich; see touching "Note in Closing").

Tkacik, Schneidman, Berry, and Bialek. Ising Models for Networks of Real Neurons. Optional (but compelling; see back to Montroll's PNAS paper, top left of pg. 7841): Mora and Bialek, Are biological systems poised at criticality?

Lectures 2 & 3 (Friday)

Null models & significance testing. DeDeo, Krakauer & Flack. Evidence of strategic periodicities in collective conflict dynamics (free copy). Optional: Weidmann & Toft. Promises and Pitfalls in the Spatial Prediction of Ethnic Violence (a critical examination of the claims in this Science article).

Parameter Estimation and Bayesian Reasoning. Clauset, Shalizi & Newman. Power-law distributions in empirical data.

Model selection. We focused on the Evidence Ratio (a MacKay favourite) and how it is approximated by a standard technique in the literature, the BIC (Bayesian Information Criterion). See also Cosma Shalizi on Methods for Selection. Optional (to give an example of how to argue for a completely different criterion) Multi-Model Inference (AIC).

Finally: David Deutsch on Scientific Argument.

Data and analysis code for the seating of students in the June 14th lecture, including basic code to implement null models for gender and field distribution, is available here.

Additional material on the thermodynamics of computation. Bennett, the Thermodynamics of Computation—a Review (free copy). Landauer, Computation: a Fundamental Physical View (free copy). Anthology of articles: Maxwell's Demon 2.

CSSS 2012 — Computation in Natural Systems

Cris Moore's Lecture notes on automata, languages, and grammars covers, elegantly, all of the basic automata concepts in the lecture, and much more besides. A supplement to The Nature of Computation.

Jim Crutchfield & Karl Young's paper, Computation at the Onset of Chaos provides a detailed and compelling account of (among other things) how a naturalistic process (in this case, the logistic map) violates the bounds of both the regular and context-free grammars.

For an introduction to the connection between (semi)groups and the regular grammars, as well as a preview of the Emergence module, see Effective Theories for Circuits and Automata.

Data on the edit histories of the George Bush wikipedia article, along with ruby code to read in and otherwise play, can be downloaded here.

CSSS 2011 — Emergence

The worksheet for the June 28th practical is available. It introduces mean-field solutions for collective phenomena, putting dynamics on the Boltzmann distribution, and the role of symmetry breaking in the formation of topological defects, with an eye towards problems in social and biological complexity. See the wiki for other slides, notes, and readings.

Lecture 1 (handwritten notes; slides) covered some basics of group theory (we showed, for example, how Z3 was “hidden” inside of S3). Group theory falls under the general category of (abstract) algebra. It is a rich subject, with many excellent introductions, including some unusual ones that may be more approachable for non-mathematicians. We also covered (gently) symmetry breaking in the Navier-Stokes equation — more discussion can be found in Uriel Frisch’s book Turbulence. Topological defects have an interesting theory behind them, and one potentially accessible introduction to homotopy theory is Cosmic Strings and Other Topological Defects. A chapter in Jim Sethna’s book is a great intermediate-level read.

Lecture 4 (handwritten notes; slides) introduced the Chomsky hierarchy, as well as “Jim’s Bestiary,” from Calculi of Emergence, to show how our ideas about computation have enriched since Aspects of Syntax. You might look to Ray Jackendoff’s book Language, Consciousness, Culture for where linguistics has gone, and might go, since. A technical reference is Introduction to Formal Languages; these concepts are also covered in graduate-level computer science texts.

The Krohn-Rhodes decomposition theorem is covered in an unusual (but compelling) fashion by the “Wild Book”, now reissued; papers by Nehaniv, Egri-Nagy, and Maler are excellent modern sources, and the latter two cover the Holonomy decomposition in detail. Attila’s package for GAP allows computation. It was mentioned that discussion of Krohn-Rhodes had fallen by the wayside, but early influential texts, including Hartmanis, considered it central. The Markov structure of the Bengalese finch song appears in Katahira et al.

Effective Theories for Circuits and Automata is now up on the arXiv.

Ray Jackendoff is external faculty, and Juris Hartmanis science board, at the Institute. Nick Metropolis (whose algorithm allows us investigate both equilibrium distributions, and non-equilibrium phenomena such as walls and vortices) and Phil Anderson (author of “More is Different”) were both founders.

Dream of the Unified Field is collected in the book of that name. The Monster group is distinct from monsters representing a group (here Feist is an input letter, or terminal symbol).

CSSS 2010 — the Physics of Reasoning

The handwritten notes for lecture one and lecture two cover most of the material. They are solely for consumption by attendees — please alert me to any errors that might have crept in!

The underlying paper for most of the first lecture is E.T. Jaynes’ 1957 Information Theory and Statistical Mechanics. For those who are interested in computing more carefully the entropy of fusion for the ice to water transition (i.e., where the rest of the 10 trillion Gigabytes come from), Yu.V. Gurikov’s 1971 paper on the Configurational Entropy of Water is fun. Alan Guth’s office is further described here.

In the second lecture, we covered the connected correlation functions (a.k.a., the cumulants, semi-invariants, and so forth).

We discussed in detail work on neurons involving Bill Bialek, Elad Schneidman, Gasper Tkacik and many others — in particular, the emergence of higher-order correlations.

For the biologically minded, there is their Nature paper that covers the experiments and sketches the theoretical aspects; for the more physically minded, Ising Models for Networks of Real Neurons (followup here). Finally, an information theoretic paper on cumulants appears in Physical Review Letters. Our own work, on animal behavior, we discussed briefly; the first paper on that appeared in April in PLoS Computational Biology.

Another aspect — which we didn’t get to — was ways to compute the partition function, Z, for the Ising model on an arbitrary graph (arbitrary β matrix, in the language of the lectures). This is much harder than the continuous Gaussian case. The (approximate) solutions for the Ising case are a source of mathematical beauty — as in related fields such as quantum field theory (which tends to talk in terms of continuous-valued states, and to introduce ϒijk terms instead of enforcing discreteness).

Series Expansion Methods for Strongly Interacting Lattice Models has a nice introduction to some of the methods, many of which work (though more slowly) on complex (or simply complicated and inhomogenous) graphs. Only a limited number of discretized problems can be solved exactly; Z on the 2D lattice, for example, requires nearly superhuman powers; Exactly Solved Models in Statistical Mechanics has the details.

If you really get into these questions for regular lattices (and their continuum limits), and have a taste for mathematics, the book by James Glimm and Arthur Jaffe, Quantum Physics, discusses the “polymer expansions,” which include the Feynman diagrams from the final slide as well as the linked cluster expansion used to compute efficiently in the Ising model. Arthur Jaffe is a member of SFI’s science board.